I’ve been quiet for a while. Partly that’s because there hasn’t been much going on: I’ve been busy, but with more normal, everyday stuff. Partly it’s because I’ve switched focus in my day job and have been caught up in a new language and new concepts.

Before I get into it, indulge me. This is a personal blog. Everything that appears here is my own opinion and doesn’t represent any position of my employer.

Several posts ago I wrote about service monitoring. It was intended to be a short intro to a series about service management that never got beyond a few drafts. More recently I was discussing what I see as a failure of the IT industry in general: overall, the industry is product-focused. Every product is sold with new features as the selling point; do this better, look at this new wavy line. Business units are organised into teams (aka silos), each focused on making their software better. There’s nothing wrong with this at all, and it’s been very successful, but that’s not what’s happening in the wider world.

A long time ago (in a galaxy far, far away), or about 8 years ago in Daventry (Northamptonshire, UK), I was presenting and took 5 minutes out to discuss what I termed ‘the commoditisation of IT’. Part of what drove the wider adoption of IT by the general public was simplification at the point of consumption and the trend towards subscriptions. I used the Apple iPhone and App Store as an example of how complex IT had become commonplace and easily usable across generations. That gave rise to the expectation that this is how IT is done, which was vastly different from what we, as a collection of IT professionals, knew actually happened.

In the years since, a number of my observations have proved accurate; throw enough darts and you’ll hit the bullseye occasionally. The world is moving towards subscription. There’s an excellent book called ‘Subscription’ by Tien Tzuo that is much more articulate about the direction of services than I will ever be.

Businesses continue to look for opportunities to exploit, to generate money and to deliver goods and services. The COVID pandemic is changing how businesses go to market and changing what consumers consume and how. As a result, it’s becoming apparent that more flexible ways of working are required.

What has this got to do with IT?

IT isn’t immune to COVID. Some products will become less important, while others will rapidly become more important (remote productivity and collaboration tools such as Zoom, for example). This will affect how IT departments deliver those products. The change didn’t start with COVID; it was already happening. COVID has simply increased the speed at which it needs to happen.

Professional Services, as typically performed today, is dead.

The last two decades have seen in-house IT departments working with vendor Professional Services teams, who deliver product-focused solutions into production. That work would typically follow this simplified lifecycle:

- Design

- Install

- Configure

- Knowledge Transfer

At some point the lifecycle repeats and we come back and do it again.

Until then, the customer’s IT teams are often on their own, occasionally interfacing with support teams. Product adoption into daily use can be pitifully low. Vendors ship more complex SKUs with more products bundled together. If the ‘value’ products are installed at all, they get ignored. I’ve heard this called shelf-ware, as in it never leaves the corporate shelf.

The products are individually very powerful, but they don’t really link up. They are often developed by separate business units and brought together under a single brand name through corporate acquisition. The result is strange bedfellows and outright incompatibility. This mixture of paper-based excellence and real-world disappointment creates inefficiencies, and siloed IT responsibilities make it worse. The business needs to go faster and is trying to, while its IT moves slower.

If any of this is shocking, it shouldn’t be. We’ve known about this for a while.

Back in 2006 Amazon launched AWS: subscription-based IT. Someone else would host your infrastructure behind a fairly simple interface, similar to an App Store experience, with a quick delivery time. All you needed was a credit card for the ongoing cost, and the game changed.

Today, when launching a new initiative, a business needs to decide whether to use a public cloud provider or its internal IT capability. Many factors determine which is preferred, but the general industry trend is towards cloud-based operational expenditure. In my opinion, businesses prefer the simplicity, cost and speed of a hosted solution.

And this impacts Professional Services how?

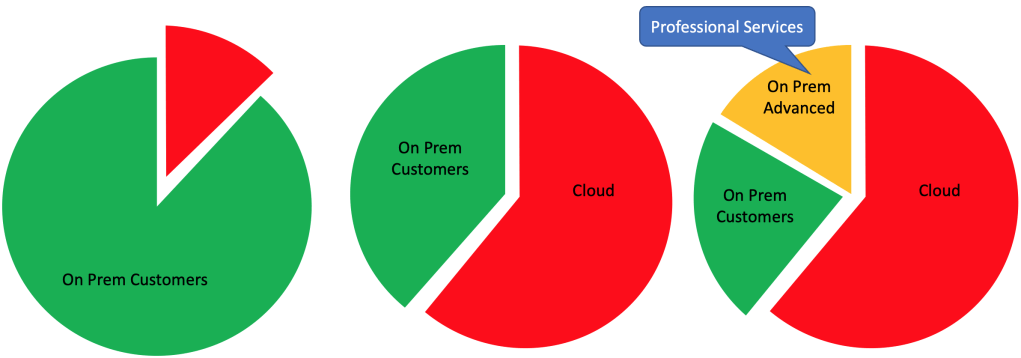

As more businesses decide to use public cloud and become comfortable with hosted solutions, the pool of companies that want in-house IT shrinks. Eventually it will consist of companies that operate at scale, or that operate in a market where compliance or regulatory requirements mean true public cloud isn’t viable. That’s probably a small pool of potential customers.

Professional Services primarily delivers Design / Install / Configure services.

Design

Everyone has best practices. In reality, this means that unless you have some strange requirement, you will be following best practices. It’s in almost every design document: ‘Unless a documented requirement prohibits it, follow best practices’. Therefore, unless the business requirements are entirely left-field, most designs look very similar. Professional Services teams find the areas that can’t match best practices and help customise the solutions to compensate.

With public cloud-based solutions, there’s probably some network design to ensure on-premises-to-cloud connectivity, and maybe some inter-cloud connectivity. Intra-cloud connectivity, however, will be handled by the provider, and you won’t typically be architecting their solution. If it doesn’t meet your requirements, then you go and find a cloud that does.

Install and Configure

This will get automated. We’re already heading in this direction with Infrastructure-as-code. It needs some polish while the older monolithic applications are modernised, but this is inevitable. Configuration will also move into lifecycle management software and capabilities.

This is what happens in VMware Cloud Foundation (VCF) today. The VCF deployment tool deploys vRealize Lifecycle Manager, which in turn deploys the rest of the vRealize suite and begins to lifecycle-manage those deployments.

With cloud-based providers you don’t install anything. Create your account, grab your authentication token and you’re off to the races. If you run into any problems, there’s a chat window where you can speak to an AI-augmented support engineer.

Knowledge Transfer

This still needs to be done, but as the tools become more intelligent and automated the focus of the knowledge transfer needs to change.

So I’ll repeat my opinion. Professional Services, as typically performed today, is dead.

The successful delivery of IT is a complex dance of three pillars: People, Process and Technology. As I’ve mentioned previously, the IT industry is generally product (Technology) focused.

With Technology moving heavily into automation and Infrastructure-as-code, we need to start looking at People and Process. Some companies are good at this, most are not, and I believe Professional Services needs to get into the game. This is the new knowledge transfer. It means ensuring that IT departments are structured to compete with the cloud-based providers and deliver IT as smoothly as AWS / Azure can. It also means ensuring that a proper IT Service Management function is wrapped around IT operations.

To do this, we need to develop a new language and think about things in different ways, delivering IT as a set of services with clear lines of management. IT Service Management. The goal is to help customers organise themselves and build their existing capabilities into services that can be linked back to business requirements.

And this is what I’ve been doing. I’ve been thinking about it for a while, and now I’ve grabbed an opportunity to dive headlong into this world and immerse myself in its new terminology and new ways of thinking about IT. I’ll be posting about this new world in my usual timely fashion.

So long live Professional Services. The kings of transformation.