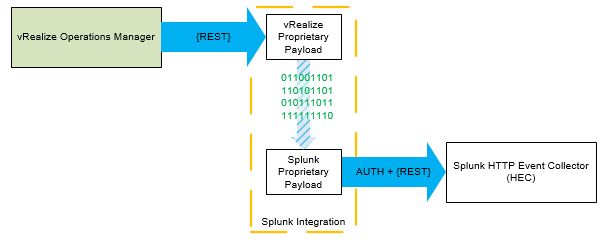

vRealize Operations is a fantastic data analysis tool from VMware, but its ability to talk to other products has always been limited to a few options.

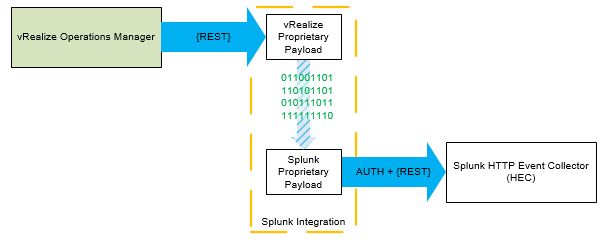

Recently I needed to make vROps forward its alerts to the log analysis tool Splunk using a Splunk HTTP Event Collector (HEC). This means taking the REST output from vROps and forwarding it on to the HEC.

VMware call this a Web-Shim and the basic process is outlined in the excellent blog series by VMware’s John Dias. That blog series discusses using vRealize Orchestrator, so it was time to get learning Python.

I broke this into 4 stages:

- Generate a vROps output

- Capture the vROps output in a webshim

- Generate a Splunk input

- Use python to turn the vROps output into the Splunk input

I didn’t figure this out in this order; it’s just easier to read it in this order!

Stage 1 – Generate a vROps output

Let’s start at the beginning. We want to send an alert to Splunk via the HEC. I could wait around for vROps to send some alerts, or I can build one I can trigger as required.

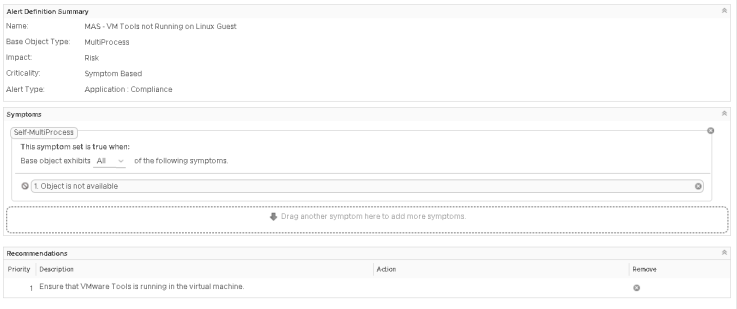

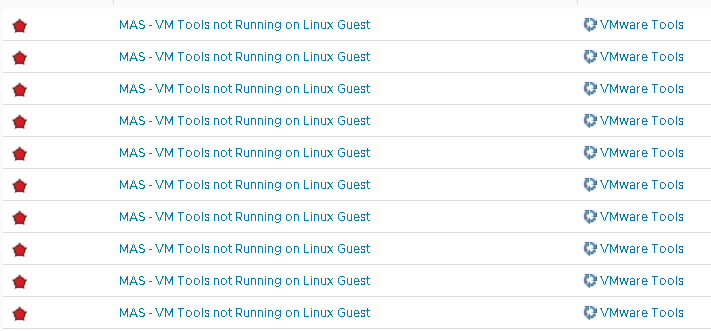

I like to have an alert that monitors the VMware Tools service. I then stop and start the VMware Tools service as required, which triggers an alert:

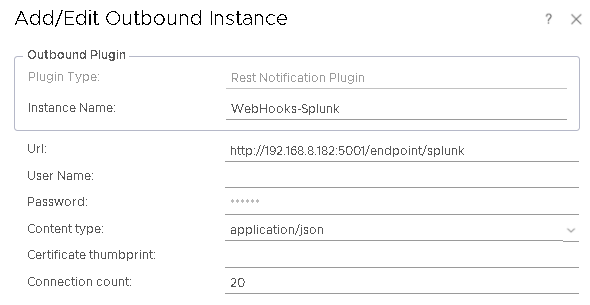

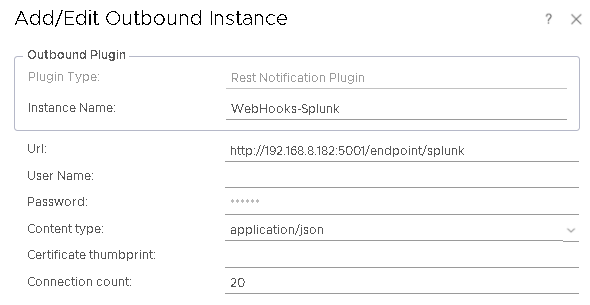

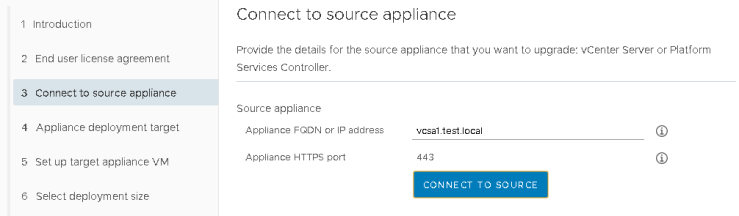

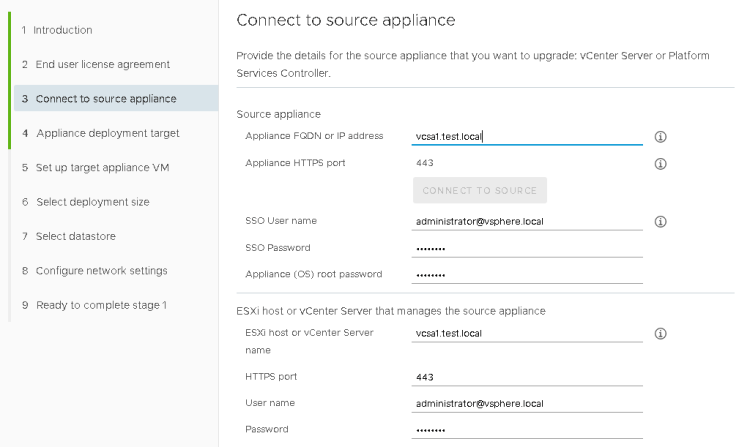

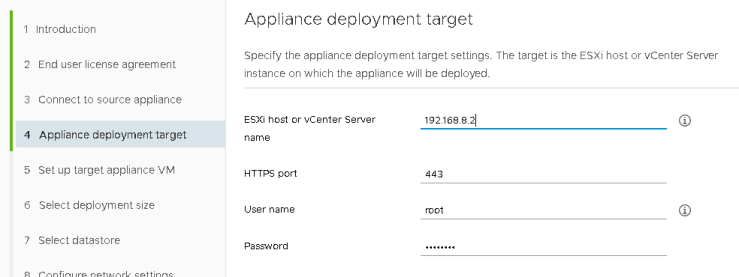

And now it’s time to configure vROps to send the alert notification. From inside vROps you will need to configure an outbound server:

This is a fairly basic configuration; just ensure that the URL points to the route of the function in the Python code (/endpoint/splunk in the code further down).

Configure a notification event. I used a notification that will send everything to Splunk:

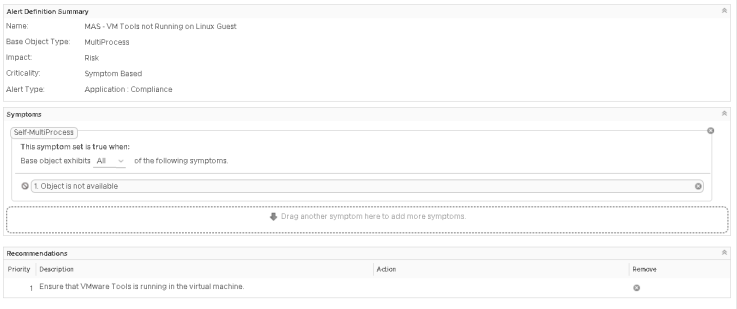

Finally I have an EPOps alert that I can trigger on demand. I have added a simple recommendation to this alert:

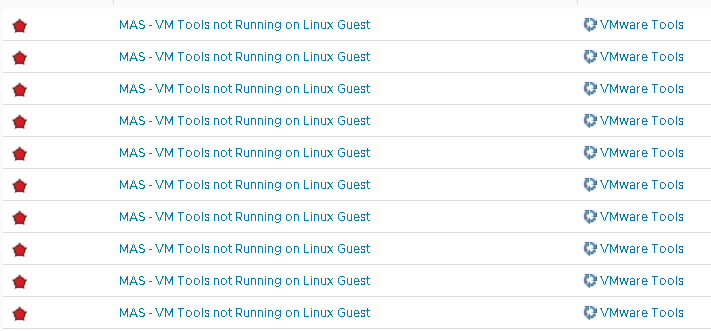

Now to generate this alert on demand, I go to my test box (vSphere Replication with VMware Tools and EndPoint Operations), start vRealize Operations EPOps agent, and then stop the VMware Tools service. This will trigger the alert, which will appear in my shim. Then just restart VMware Tools to cancel the alert.

This can be used multiple times to test the code:

Stage 2 – Capture the vROps Output in a webshim

Having originally followed and copied John’s web series I already had a PhotonOS environment that could host the webshim code. Digging deeper in this example code I discovered that it was possible to reuse pieces of VMware’s code to capture and parse the vROps output.

NOTE: I only discovered this after writing something vaguely similar and more or less functional, but VMware’s code was just better!

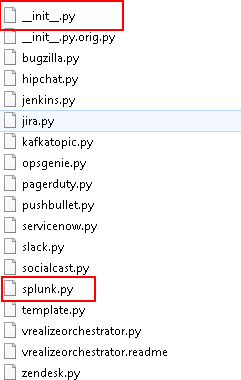

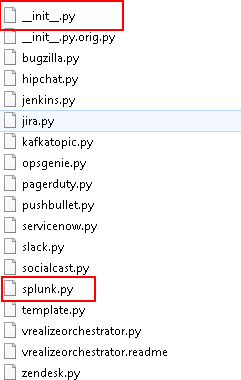

From the VMware code I took the basic folder layout and modified the following file:

This file has the various logic for parsing both LogInsight and vRealize Operations input, along with the sample webshims. I modified this file at the bottom where it imports the various webshim Python code:

# Import individual shims

#import loginsightwebhookdemo.bugzilla

#import loginsightwebhookdemo.hipchat

#import loginsightwebhookdemo.jenkins

#import loginsightwebhookdemo.jira

#import loginsightwebhookdemo.kafkatopic

#import loginsightwebhookdemo.opsgenie

#import loginsightwebhookdemo.pagerduty

#import loginsightwebhookdemo.pushbullet

#import loginsightwebhookdemo.servicenow

#import loginsightwebhookdemo.slack

#import loginsightwebhookdemo.socialcast

#import loginsightwebhookdemo.template

#import loginsightwebhookdemo.vrealizeorchestrator

#import loginsightwebhookdemo.zendesk

# Shim for Splunk...

import loginsightwebhookdemo.splunk

I commented out the other webshims and added the import for the Splunk webshim code.

Then I created the file splunk.py and placed it into the loginsightwebhookdemo folder:

It’s time to start writing some Python code.

To enable use of the functions included in the __init__.py file, I added the following lines to the top of the splunk.py.

#!/usr/bin/env python

from loginsightwebhookdemo import app, parse

from flask import request

I then created the Splunk webshim entry point into the function:

@app.route("/endpoint/splunk", methods=['POST'])

@app.route("/endpoint/splunk/<ALERTID>", methods=['POST','PUT'])

def splunk(ALERTID=None):

Finally I call the parse function from __init__.py to take the incoming payload (request) and produce a Python dictionary (alert):

alert = parse(request)

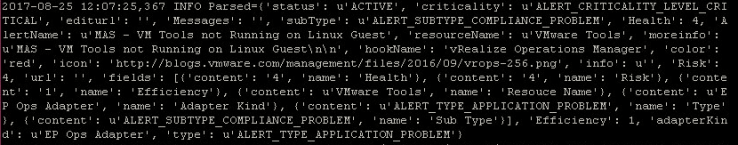

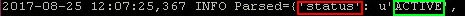

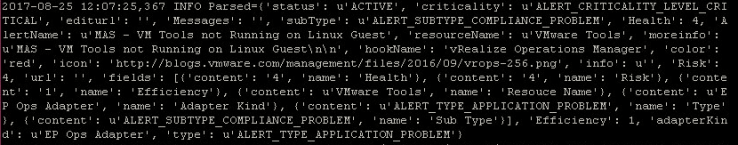

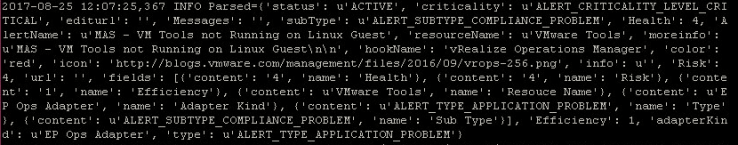

If you look at this parse function in __init__.py you can see that it logs the parsed information to the screen:

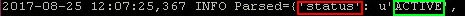

logging.info("Parsed=%s" % alert)

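Finally close the Python function block. Flask expects a view function to return a response, so return a short body rather than nothing:

return "OK"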

This completes the basic Python code:

#!/usr/bin/env python

# These are integration functions written by VMware

from loginsightwebhookdemo import app, parse

from flask import request

# This is the splunk integration function

@app.route("/endpoint/splunk", methods=['POST'])

@app.route("/endpoint/splunk/<ALERTID>", methods=['POST','PUT'])

def splunk(ALERTID=None):

    # Retrieve fields in notification, using pre-written VMware function

    alert = parse(request)

    # Flask needs a response to send back, so return a short body

    return "OK"

With everything now configured, I start the Flask webserver using:

python runserver.py 5001

Once that’s running, trigger the alert. The splunk code will be called and the vROps payload will be output to the screen:

Stage 3 – Generating a Splunk input

Splunk helpfully outline how to generate entries from the command line for the HEC:

curl -k -u "x:<token>" https://<host>:8088/services/collector -d '{"sourcetype": "mysourcetype", "event":"Hello, World!"}'

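Let’s take a look at what this needs:

- -u "x:<token>"

This refers to the authorisation token, which needs to be created from Splunk.

- https://<host>:8088/services/collector

This refers to the HEC URL. Simply replace <host> with the FQDN of your HEC server.

- -d '{"sourcetype": "mysourcetype", "event":"Hello, World!"}'

This is the body of the message: the sourcetype to file the event under and the event text itself.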

Putting this together will generate the ubiquitous Hello World message in Splunk:

Excellent, now we need to programmatically do the same.
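As a point of comparison, the curl command can be mirrored with nothing but the Python standard library. This is only a minimal sketch and not the approach used in the rest of this post; the function name build_hec_request and the placeholder host and token are made up for illustration. It builds the request without sending it, and the commented lines show how it could be sent.

```python
import base64
import json
import urllib.request


def build_hec_request(host, token, event, sourcetype="mysourcetype"):
    """Build (but do not send) the same request as the curl example."""
    url = "https://%s:8088/services/collector" % host
    body = json.dumps({"sourcetype": sourcetype, "event": event}).encode("utf-8")
    # curl -u "x:<token>" is HTTP basic auth: user "x", password <token>
    credentials = base64.b64encode(("x:%s" % token).encode("utf-8")).decode("ascii")
    headers = {"Authorization": "Basic " + credentials}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder host and token, for illustration only
req = build_hec_request("splunk.example.com", "my-token", "Hello, World!")
print(req.get_method(), req.full_url)

# To send it while skipping certificate checks (the equivalent of curl -k):
# import ssl
# context = ssl.create_default_context()
# context.check_hostname = False
# context.verify_mode = ssl.CERT_NONE
# print(urllib.request.urlopen(req, context=context).read())
```

Keeping the build step separate from the send step makes it easy to inspect the URL, header and body before anything reaches Splunk.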

What you don’t want to do is lots of trial and error, work out that it’s the requests library within Python, and then write a cheap, quick and dirty version of the callapi function in __init__.py. What you want to do is just use the callapi function that VMware provide in __init__.py. This has the following definition:

def callapi(url, method='post', payload=None, headers=None, auth=None, check=True)

Using my previous trial and (mostly) error I know what is needed:

- url: The URL of the Splunk HEC, https://<host>:8088/services/collector

- method: We want to POST the message to the Splunk HEC

- payload: The message “hello world”

- headers: The Splunk token that is used for authentication, “x:<token>”

- auth: The Splunk authentication token is passed as a header, so this will be None

- check: This relates to SSL certificate checking, which I want to ignore initially, so this will be False

Now to write this into the Python splunk.py file.

Adjust the import lines to include the callapi and json functions:

from loginsightwebhookdemo import app, parse, callapi

from flask import request, json

To provide some portability to the code use constants to hold the values for the Splunk HEC URL and Token:

# This is the Splunk HEC URL

SPLUNKHECURL = "https://<FQDN>:8088/services/collector/event"

# This is the token to allow authentication into Splunk HEC

# Keep this secret, as anyone with it can inject information into Splunk

SPLUNKHECTOKEN = "Splunk 1234X12X-123X-XXXX-XXXX-12XX3XXX1234"
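Since the token is a secret, one option is to keep it out of the source file altogether. The sketch below reads it from an environment variable instead. The variable name SPLUNK_HEC_TOKEN and the helper load_hec_token are my own assumptions and are not part of VMware's shim code.

```python
import os


def load_hec_token(env=os.environ, name="SPLUNK_HEC_TOKEN"):
    """Return the Authorization header value for the HEC, read from the environment."""
    token = env.get(name)
    if not token:
        raise RuntimeError("Set %s before starting the shim" % name)
    # The header value takes the form "Splunk <token>", as in the constant above
    return token if token.startswith("Splunk ") else "Splunk " + token


# Example with an explicit mapping instead of the real environment
SPLUNKHECTOKEN = load_hec_token({"SPLUNK_HEC_TOKEN": "1234X12X-123X-XXXX-XXXX-12XX3XXX1234"})
print(SPLUNKHECTOKEN)
```

In the shim itself the call would simply be load_hec_token(), with the variable exported in the shell that runs runserver.py.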

Inside the splunk function we need to build the necessary header and body of the call to the Splunk HEC.

Add a header variable that holds the HEC authorisation token:

MESSAGEHEADER = {'Authorization': SPLUNKHECTOKEN}

Create the body of the Splunk message:

MESSAGEDATA = {

    "sourcetype": "txt",

    "event": "hello world",

}

Finally, change the return line to return the result of callapi, so the function finishes by passing back whatever the call to the Splunk HEC returned:

# Post the message to Splunk and exit the script

return callapi(SPLUNKHECURL, 'post', json.dumps(MESSAGEDATA), MESSAGEHEADER, None, False)

The body of the splunk.py file now looks like:

#!/usr/bin/env python

# These are integration functions written by VMware

from loginsightwebhookdemo import app, parse, callapi

from flask import request, json

# This is the Splunk HEC URL

SPLUNKHECURL = "https://<FQDN>:8088/services/collector/event"

# This is the token to allow authentication into Splunk HEC

# Keep this secret, as anyone with it can inject information into Splunk

SPLUNKHECTOKEN = "Splunk 1234X12X-123X-XXXX-XXXX-12XX3XXX1234"

# This is the splunk integration function

@app.route("/endpoint/splunk", methods=['POST'])

@app.route("/endpoint/splunk/<ALERTID>", methods=['POST','PUT'])

def splunk(ALERTID=None):

    # Retrieve fields in notification, using pre-written VMware function

    alert = parse(request)

    # The HEC token travels in the Authorization header

    MESSAGEHEADER = {'Authorization': SPLUNKHECTOKEN}

    # The event is a plain string; a set such as {"hello world"} cannot be serialised to JSON

    MESSAGEDATA = {

        "sourcetype": "txt",

        "event": "hello world",

    }

    # Post the message to Splunk and exit the script

    return callapi(SPLUNKHECURL, 'post', json.dumps(MESSAGEDATA), MESSAGEHEADER, None, False)

Now when vROps sends the notification it will be parsed and displayed to the screen and a Hello World output will appear in the Splunk HEC.

Stage 4 – Use python to turn the vROps output into the Splunk input

Nearly there!

All the necessary code is in place; the only section that needs updating is MESSAGEDATA, and this is where the real requirements are fulfilled.

It’s fairly simple. The parsed output is a Python dictionary called alert, and each value in it is retrieved by its key (element):

value = alert['element']

How do you know what elements are included? They’re actually shown in the parsed output from the parse function:

For example:

value = alert['status']

Will give an output of ‘ACTIVE’

In this example I will pull in a few elements that are included in the parsed alert output:

MESSAGEDATA = {

    "sourcetype": alert['resourceName'],

    "event": {

        "Title": alert['AlertName'],

        "Impacted_Object": alert['resourceName'],

        "Source": alert['hookName'],

        "Status": alert['status'],

    },

}
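One thing to watch with direct indexing is that a missing key raises a KeyError, and the shim then fails instead of forwarding the alert. A slightly more defensive sketch uses dict.get with a fallback value. The function name build_message and the sample alert values are hypothetical; only the key names come from the parsed output above.

```python
import json


def build_message(alert):
    """Map the parsed vROps alert onto a Splunk HEC message body."""
    def field(key):
        # Fall back to a marker instead of raising KeyError on a missing key
        return alert.get(key, "unknown")

    return {
        "sourcetype": field("resourceName"),
        "event": {
            "Title": field("AlertName"),
            "Impacted_Object": field("resourceName"),
            "Source": field("hookName"),
            "Status": field("status"),
        },
    }


# Hypothetical sample values, for illustration only
sample = {"AlertName": "VMware Tools stopped", "resourceName": "testbox", "status": "ACTIVE"}
print(json.dumps(build_message(sample), indent=2))
```

Inside the splunk function this would become MESSAGEDATA = build_message(alert).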

And in a nutshell, that’s how to get vROps sending basic alerts to Splunk via a HEC.

But wait… the alert notification doesn’t contain half the information that we need. We need to call back to vROps to get the rest of the information.

Stage 5 – Phoning home for more information

At this point, it’s going to basically be REST API calls back to vROps and this is fairly well documented at https://<VROPS FQDN>/suite-api/docs/rest/index.html

To make this work, add some additional constants above the body of the splunk function to hold the various authentication details and URLs.

# vRealize Operations Details

VROPSURL = "https://<FQDN>"

VROPSUSER = "admin"

VROPSPASSWORD = "password"

VROPSAPI = "/suite-api/api"

VROPSAPIALERT = "/suite-api/api/alerts"

Now in the body of the function, before the MESSAGEDATA variable is built, add some lines to build the URL of the alert and make a REST call back to vROps:

The vROps output needs to be in JSON format:

jsonHeader = {'accept': 'application/json'}

And build the correct URL for calling the alert:

ALERTURL = VROPSURL + VROPSAPIALERT + "/" + ALERTID
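Note that the first route has no <ALERTID>, so ALERTID can be None, and concatenating None onto a string raises a TypeError. A small guard avoids that. This is a sketch only: the helper name build_alert_url and the placeholder host are assumptions.

```python
from urllib.parse import quote

VROPSURL = "https://vrops.example.com"  # placeholder host
VROPSAPIALERT = "/suite-api/api/alerts"


def build_alert_url(alert_id):
    """Return the alert URL, or None when the shim was called without an alert ID."""
    if not alert_id:
        return None
    # quote() keeps an unexpected character in the ID from altering the URL path
    return VROPSURL + VROPSAPIALERT + "/" + quote(str(alert_id), safe="")


print(build_alert_url("1234-abcd"))
print(build_alert_url(None))
```

When the helper returns None, the function can skip the call back to vROps and send Splunk only what was in the notification.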

Now make a request to the URL, passing the various authentication parameters and storing the output. This uses the requests library, so add import requests to the top of splunk.py:

alertIDResponse = requests.get(ALERTURL , headers=jsonHeader, auth=(VROPSUSER, VROPSPASSWORD), verify=False)

Check the response code and collect the alert Definition ID number:

if alertIDResponse.status_code == requests.codes.ok:

    alertDefID = alertIDResponse.json().get('alertDefinitionId')

else:

    alertDefID = "Unable to find the alert"

Now the alertDefID variable holds the definition ID, and from this we can make another REST call to get the alert definition information. This will include a recommendation ID (if the alert has any recommendations). Then you can make another REST call to vROps using the recommendation ID and get the recommendation information.

Put all of this together and you can send Splunk the Alert, the definition and any recommendation.
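The chain of lookups could be sketched roughly as below. Treat it as an outline under stated assumptions, not a tested integration: the alertdefinitions and recommendations paths, and the states and recommendationPriorityMap field names, are my recollection of the suite-api and should be checked against the API documentation on your own vROps instance. The function name collect_alert_details and the stand-in data are hypothetical.

```python
def collect_alert_details(alert_id, fetch_json, api="/suite-api/api"):
    """Follow alert -> definition -> recommendations using fetch_json(path).

    fetch_json is any callable that returns the decoded JSON for a path,
    or None on failure (for example a thin wrapper around requests.get).
    """
    details = {"alert": None, "definition": None, "recommendations": []}

    alert = fetch_json("%s/alerts/%s" % (api, alert_id))
    if not alert:
        return details
    details["alert"] = alert

    definition_id = alert.get("alertDefinitionId")
    if not definition_id:
        return details
    # ASSUMPTION: alert definitions live under /alertdefinitions/<id>
    definition = fetch_json("%s/alertdefinitions/%s" % (api, definition_id))
    if not definition:
        return details
    details["definition"] = definition

    # ASSUMPTION: recommendation IDs are the keys of each state's
    # "recommendationPriorityMap"; verify against your vROps API docs.
    for state in definition.get("states", []):
        for rec_id in state.get("recommendationPriorityMap", {}):
            recommendation = fetch_json("%s/recommendations/%s" % (api, rec_id))
            if recommendation:
                details["recommendations"].append(recommendation)
    return details


# Stand-in data so the sketch runs without a vROps instance
fake_api = {
    "/suite-api/api/alerts/a1": {"alertDefinitionId": "d1"},
    "/suite-api/api/alertdefinitions/d1": {
        "states": [{"recommendationPriorityMap": {"r1": 1}}]
    },
    "/suite-api/api/recommendations/r1": {"description": "Restart VMware Tools"},
}
result = collect_alert_details("a1", fake_api.get)
print(result["recommendations"])
```

Passing the fetch function in as a parameter keeps the lookup logic separate from authentication and certificate handling, and lets it be exercised with canned data before pointing it at a live system.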

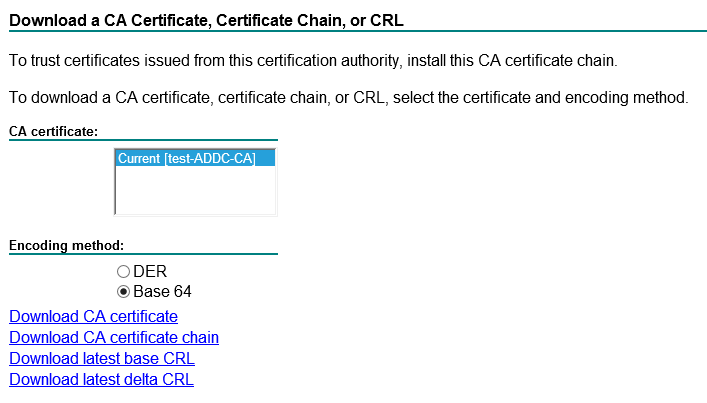

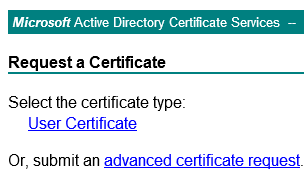

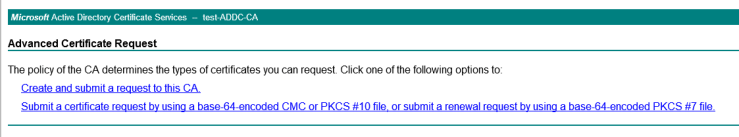
